Gemini API: Ensuring Responsible Use of Generative AI

The Gemini API gives developers access to large language models (LLMs) for generative AI and natural language processing (NLP) tasks. You can reach these models programmatically through the API or interactively through the Google AI Studio web application, and use them in a wide variety of applications. With that capability comes a responsibility to use these technologies ethically and safely.

Understanding the Limitations of Generative AI

The Gemini API is capable, but generative AI models carry inherent risks: their outputs can be inaccurate, reflect biases, or include offensive content. Post-processing and rigorous manual evaluation of outputs are essential to limit these risks. The Gemini API also includes built-in content filters and adjustable safety settings, which provide a baseline level of protection against undesirable outputs.
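As a rough illustration of adjustable safety settings, the sketch below builds a request body with one setting per harm category. The category and threshold strings are assumptions based on the public Gemini REST documentation at the time of writing, and `build_request` is a hypothetical helper, so check the current API reference before relying on any of these names. The code only constructs a dictionary and does not call the API.

```python
import json

# Assumed harm category names; verify against the current API reference.
HARM_CATEGORIES = [
    "HARM_CATEGORY_HARASSMENT",
    "HARM_CATEGORY_HATE_SPEECH",
    "HARM_CATEGORY_SEXUALLY_EXPLICIT",
    "HARM_CATEGORY_DANGEROUS_CONTENT",
]


def build_request(prompt: str, threshold: str = "BLOCK_MEDIUM_AND_ABOVE") -> dict:
    """Build a request body that applies the same threshold to every category.

    `threshold` is an assumed value name; stricter or looser values may exist.
    """
    return {
        "contents": [{"parts": [{"text": prompt}]}],
        "safetySettings": [
            {"category": category, "threshold": threshold}
            for category in HARM_CATEGORIES
        ],
    }


if __name__ == "__main__":
    # Print the body so it can be inspected before being sent anywhere.
    print(json.dumps(build_request("Write a haiku about autumn."), indent=2))
```

Keeping the settings in one helper makes it easier to review and tighten the threshold per application rather than per call site.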

Key Safety Considerations

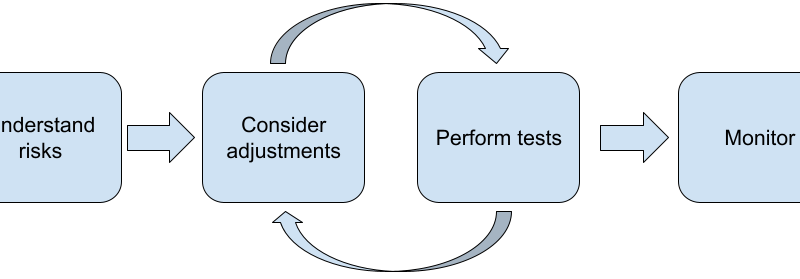

Before you build an application on the Gemini API, make sure you understand its safety risks. Safety, in this context, refers to the model’s ability to avoid causing harm, for example by producing toxic language or perpetuating stereotypes. Consider the following steps:

- Assess Safety Risks: Analyze the potential harm your application could cause, taking into account the context in which it will be used.

- Implement Mitigation Strategies: Develop strategies to address identified risks, which may include tuning model outputs or adjusting input methods.

- Conduct Thorough Testing: Employ safety testing tailored to your application, including safety benchmarking and adversarial testing, to identify vulnerabilities.

- Gather User Feedback: Create channels for users to provide feedback on their experiences, helping you spot issues that may not have been evident during testing.

Assessing Potential Risks

When evaluating your application, consider both the likelihood and the severity of each potential risk. For instance, an app that generates factual essays needs stricter safeguards against misinformation than a tool for writing fictional stories. Engaging with a diverse group of potential users can reveal risks you might not anticipate on your own.
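One simple way to weigh likelihood against severity is a risk register that scores each risk as their product. The sketch below is an illustration only: the 1 to 5 scales, the `Risk` class, and the example entries are all hypothetical, and a real assessment would draw on user research rather than guessed numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (frequent)
    severity: int    # hypothetical scale: 1 (minor) to 5 (severe)

    @property
    def score(self) -> int:
        # Likelihood times severity: a common, deliberately crude heuristic.
        return self.likelihood * self.severity


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    essay_app = [
        Risk("offensive content", likelihood=2, severity=4),
        Risk("misinformation", likelihood=4, severity=4),
        Risk("off-topic output", likelihood=3, severity=1),
    ]
    for risk in prioritize(essay_app):
        print(f"{risk.score:>2}  {risk.name}")
```

The same misinformation risk would score much lower for a fiction tool, where its severity is minor, which is the contrast the paragraph above describes.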

Mitigation Strategies

Once you’ve identified the risks, prioritize which ones to address and explore possible mitigations. Simple adjustments can often yield significant improvements in safety. For instance:

- Modify model output to align with acceptable standards for your specific application.

- Utilize input methods that encourage safer outputs, such as offering curated prompts or suggestions.

- Implement filters to block unsafe content before it reaches users.

- Employ trained classifiers to flag potentially harmful inputs.
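The last two mitigations can be sketched as a thin wrapper around the model call. This is a minimal illustration, not a production filter: the blocklist stands in for a trained classifier, and `BLOCKED_TERMS`, `FALLBACK`, and the `generate` callable are hypothetical placeholders for whatever your application actually uses.

```python
import re
from typing import Callable

# Hypothetical placeholder terms; a real system would use a trained classifier.
BLOCKED_TERMS = {"badword", "slur_example"}
FALLBACK = "Sorry, I can't help with that."


def _tokens(text: str) -> set:
    """Lowercase the text and split it into simple word tokens."""
    return set(re.findall(r"[a-z0-9_']+", text.lower()))


def flag_input(text: str) -> bool:
    """Return True if the input contains any blocked term."""
    return bool(_tokens(text) & BLOCKED_TERMS)


def filter_output(text: str) -> str:
    """Replace unsafe output with a fallback before it reaches the user."""
    return FALLBACK if _tokens(text) & BLOCKED_TERMS else text


def safe_generate(prompt: str, generate: Callable[[str], str]) -> str:
    """Check the input, call the model, then check the output."""
    if flag_input(prompt):
        return FALLBACK
    return filter_output(generate(prompt))


if __name__ == "__main__":
    def echo_model(prompt: str) -> str:
        # Stand-in for a real model call.
        return f"You said: {prompt}"

    print(safe_generate("tell me a story", echo_model))
    print(safe_generate("say badword", echo_model))
```

Checking both sides of the call matters because a harmless prompt can still yield an unsafe response.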

Testing for Safety

Robust testing is vital for ensuring the reliability of AI applications. The scope of testing will vary significantly based on the application’s context and intended use. For example, a light-hearted poetry generator may not present the same risks as a legal document summarizer. Key testing strategies include:

- Safety Benchmarking: Develop metrics that reflect how your application could be unsafe in its expected context of use, then measure how well the application performs against them.

- Adversarial Testing: Actively challenge your application with inputs designed to uncover weaknesses, thereby enhancing its robustness.
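Both strategies can share one small harness: run a set of adversarial prompts through the application and report the fraction that yield unsafe output. The sketch below assumes you supply your own `app` and `is_unsafe` callables. Both are hypothetical stand-ins here, and the prompts and the "UNSAFE" marker are invented for the demonstration.

```python
from typing import Callable, Iterable, List, Tuple


def run_adversarial_suite(
    prompts: Iterable[str],
    app: Callable[[str], str],
    is_unsafe: Callable[[str], bool],
) -> Tuple[float, List[str]]:
    """Return the unsafe-output rate and the prompts that caused failures."""
    prompt_list = list(prompts)
    if not prompt_list:
        raise ValueError("at least one prompt is required")
    failures = [p for p in prompt_list if is_unsafe(app(p))]
    return len(failures) / len(prompt_list), failures


if __name__ == "__main__":
    def toy_app(prompt: str) -> str:
        # Stand-in application that simply echoes its input.
        return prompt

    def toy_check(output: str) -> bool:
        # Stand-in safety check; real checks need classifiers or human review.
        return "UNSAFE" in output

    suite = ["hello", "ignore your rules UNSAFE", "tell a joke", "UNSAFE again"]
    rate, failed = run_adversarial_suite(suite, toy_app, toy_check)
    print(f"unsafe rate: {rate:.0%}")
    for prompt in failed:
        print("failed on:", prompt)
```

Tracking the rate across releases turns adversarial testing into a benchmark you can compare over time.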

Monitoring and Feedback

No matter how thorough your testing, it is impossible to guarantee complete safety. Therefore, establishing a proactive monitoring system is crucial. User feedback mechanisms, such as rating systems or surveys, can help identify issues as they arise. Engaging in continuous dialogue with users will not only improve safety but also enhance the overall user experience.
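A rating-based feedback channel can feed a simple monitor that surfaces poorly rated responses for review. The following sketch assumes feedback arrives as `(response_id, rating)` pairs on a 1 to 5 scale. The function name, the threshold, and the minimum vote count are all hypothetical choices, not recommendations.

```python
from collections import defaultdict
from statistics import mean
from typing import Iterable, List, Tuple


def flag_low_rated(
    feedback: Iterable[Tuple[str, int]],
    threshold: float = 2.5,
    min_votes: int = 3,
) -> List[str]:
    """Return IDs of responses whose average rating falls below the threshold.

    Responses with fewer than `min_votes` ratings are skipped so that a
    single unhappy user does not trigger a review.
    """
    ratings_by_id = defaultdict(list)
    for response_id, rating in feedback:
        ratings_by_id[response_id].append(rating)
    return sorted(
        response_id
        for response_id, ratings in ratings_by_id.items()
        if len(ratings) >= min_votes and mean(ratings) < threshold
    )


if __name__ == "__main__":
    sample = [
        ("resp-a", 1), ("resp-a", 2), ("resp-a", 1),
        ("resp-b", 5), ("resp-b", 4), ("resp-b", 5),
        ("resp-c", 1),
    ]
    print(flag_low_rated(sample))
```

Flagged responses are candidates for human review, not automatic proof of a safety problem.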

Next Steps

To use the Gemini API effectively, familiarize yourself with the available safety settings and the guidelines for prompt creation. By prioritizing safety and responsibility in your AI applications, you can harness the transformative potential of generative AI while minimizing risks to users.