Apple’s Voice-to-Text Feature Causes Stir with Unintended ‘Trump’ Substitution

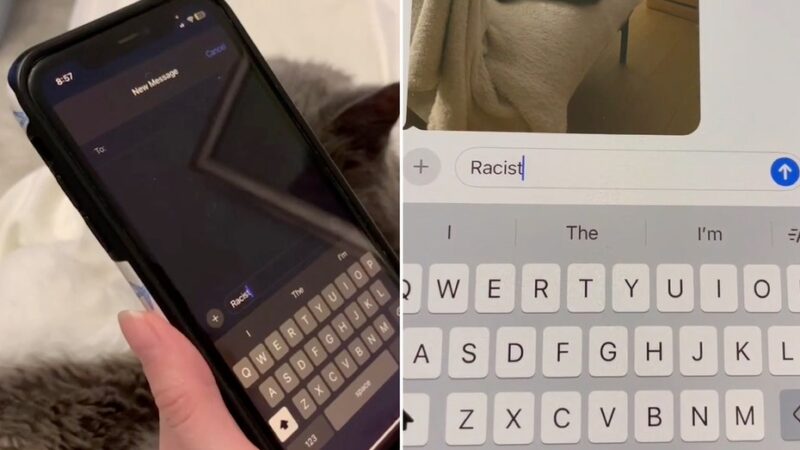

Apple’s iPhone has recently found itself at the center of a heated debate over its voice-to-text functionality. The controversy ignited after a TikTok video went viral, showing a user dictating the word “racist,” only for the feature to briefly display “Trump” before reverting to the intended word.

Reproducing the Incident

Fox News Digital tested the dictation feature and replicated the behavior multiple times. In these tests, the word “Trump” briefly appeared when the user said “racist,” mirroring the viral TikTok clip. The substitution was not consistent: the feature often transcribed “racist” correctly, and in some instances it displayed unrelated words such as “reinhold” and “you.”

Apple Responds to Concerns

In response to the backlash, an Apple spokesperson acknowledged the issue on Tuesday, stating, “We are aware of an issue with the speech recognition model that powers Dictation, and we are rolling out a fix as soon as possible.” The spokesperson explained that the speech recognition system can sometimes misinterpret words with phonetic similarities, particularly those containing the “r” consonant.

A Pattern of Misinterpretation

This isn’t the first instance of technology creating a stir over perceived political bias. Earlier this year, a video surfaced showing Amazon’s Alexa assistant providing different responses when asked about voting for Kamala Harris compared to Donald Trump. In that case, Alexa declined to provide reasons for voting for Trump but offered a detailed response for Harris, prompting scrutiny from lawmakers.

Amazon’s Quick Resolution

Amazon addressed the issue promptly after the video went viral. Within two hours of the video’s release, the company implemented a manual override so that Alexa would give balanced responses about all political candidates. Before that, the assistant had manual overrides only for President Biden and Trump; Harris had been overlooked because fewer users asked about her.

Continued Accountability in Technology

During a briefing with House Judiciary Committee staffers, Amazon representatives expressed regret over the incident and emphasized their commitment to keeping Alexa’s responses neutral. They acknowledged that the company had fallen short of its policy of preventing the assistant from displaying political bias. Amazon has since audited its systems and put manual overrides in place for all candidates and a variety of election-related prompts.

As the technology continues to evolve, addressing bias in AI systems remains important. Both Apple and Amazon are taking steps to fix these issues, but ongoing vigilance is needed to ensure that their products serve users without exhibiting unintended political leanings.